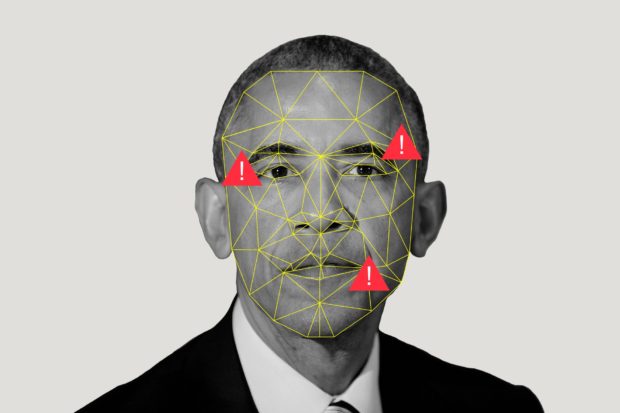

Deepfake: Porn and Politics Made by Artificial Intelligence

So-called “deepfake” videos are proliferating online, most of them pornographic but some politically motivated, security researchers said Monday.

A report by the security firm Deeptrace identified 96 percent of deepfakes as “nonconsensual pornography,” which uses the images of female celebrities or others in videos manipulated using artificial intelligence.


What Does Deepfake Mean?

The researchers found that the number of deepfake videos almost doubled over the last seven months, to 14,678.

The report said four websites dedicated to deepfake pornography received more than 134 million views, which “demonstrates a market for websites creating and hosting pornography, a trend that will continue to grow unless decisive action is taken,” said Deeptrace chief executive Giorgio Patrini in a blog post.

Patrini said deepfakes are also “making a significant impact on the political sphere,” noting cases in Gabon and Malaysia targeting political leaders.

“These examples are possibly the most powerful indications of how deepfakes are already destabilizing political processes,” he said.

Patrini said some new cases are emerging “where synthetic voice audio and images of non-existent, synthetic people were used to enhance social engineering against businesses and governments.”

The report comes amid growing concerns that deepfakes could be used to manipulate voters ahead of elections in the United States and elsewhere.

Big social networks have been stepping up efforts to detect and thwart the distribution of manipulated videos, but analysts say it is increasingly difficult to stop the spread of deepfakes.